常用软件安装

常用软件安装

Table of Contents generated with DocToc (opens new window)

# 安装Tomcat

拉取9.0.63

docker pull tomcat:9.0.63-jdk8-openjdk-slim

启动容器

[root@localhost ~]# docker run -d -p 8080:8080 --name tomcat9 tomcat:9.0.63-jdk8-openjdk-slim

aa1bff62aa568887cd8611c4922a1ba8cd415c221cd7577af7c5ba3dce0e6935

2

进入容器 把webapp.dist中的文件复制到webapp中

[root@localhost ~]# docker exec -it aa1bff62aa568887cd8611c4922a1ba8cd415c221cd7577af7c5ba3dce0e6935 /bin/bash

root@aa1bff62aa56:/usr/local/tomcat# ls

BUILDING.txt LICENSE README.md RUNNING.txt conf logs temp webapps.dist

CONTRIBUTING.md NOTICE RELEASE-NOTES bin lib native-jni-lib webapps work

root@aa1bff62aa56:/usr/local/tomcat# cp -r webapps.dist/* webapps

root@aa1bff62aa56:/usr/local/tomcat# cd webapps

root@aa1bff62aa56:/usr/local/tomcat/webapps# ls

ROOT docs examples host-manager manager

2

3

4

5

6

7

8

再访问,就不是404了

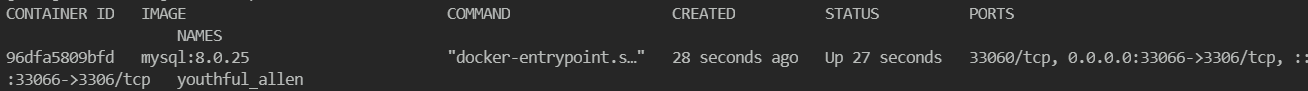

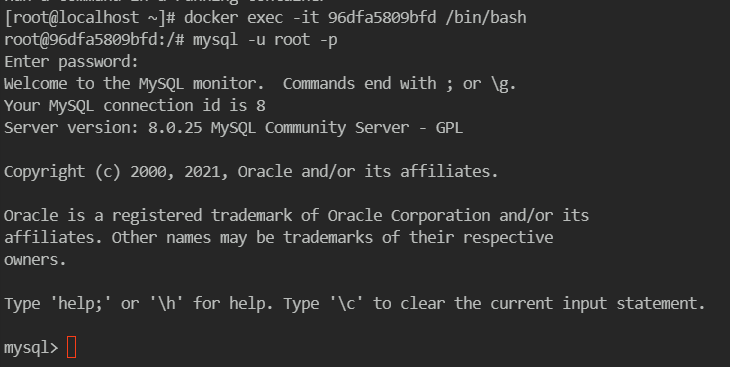

# 安装MySQL

# 简单版

拉取

docker pull mysql:8.0.25

启动

[root@localhost ~]# docker run -p 33066:3306 -e MYSQL_ROOT_PASSWORD=root -d mysql:8.0.25

6fa14e9ba3b17e0507d911636e76093c2c5c03ad62f163b9886a5b3315176379

2

进入容器

[root@localhost ~]# docker exec -it 6fa14e9ba3b1 /bin/bash

连接上mysql

mysql -u root -p 输入上面的密码

成功进入

建数据库建表测试

mysql> create database test;

Query OK, 1 row affected (0.00 sec)

mysql> use test

Database changed

mysql> create table test(id int,name varchar(20));

Query OK, 0 rows affected (0.03 sec)

mysql> insert into test values(1,'test');

Query OK, 1 row affected (0.01 sec)

mysql> select * from test;

+------+------+

| id | name |

+------+------+

| 1 | test |

+------+------+

1 row in set (0.00 sec)

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

# 挂载数据卷版

运行容器并挂载数据卷

docker run -d -p 33066:3306 --privileged=true -v /zdkuse/mysql/log:/var/log/mysql -v /zdkuse/mysql/data:/var/lib/mysql -v /zdkuse/mysql/conf:/etc/mysql.conf.d -e MYSQL_ROOT_PASSWORD=root --name newmysql mysql:8.0.25

新建my.cnf文件

cd /zdkuse/mysql/conf

vim my.cnf

2

[client]

default_character_set=utf8mb4

[mysqld]

collation_server = utf8mb4_general_ci

character_set_server = utf8mb4

2

3

4

5

# 安装Redis

拉镜像

docker pull redis:6.0.8

将一个模板redis.conf拷贝到数据卷本机目录下

文件为 /zdkuse/redis/redis.conf

修改内容

requirepass 密码

注释掉 # bind 127.0.0.1

将daemonize yes注释起来或者 daemonize no设置,因为该配置和docker run中-d参数冲突,会导致容器一直启动失败

开启redis数据持久化 appendonly yes 可选

运行

docker run -p 6378:6379 --name myredis --privileged=true -v /zdkuse/redis/redis.conf:/etc/redis/redis.conf -v /zdkuse/redis/data:/data -d redis:6.0.8 redis-server /etc/redis/redis.conf

注意,这里有大坑,redis.conf这个文件,不能有空行的" "空格,否则运行报错

# 部署Redis集群

1~2亿条数据需要缓存,请问如何设计这个存储案例

使用分布式存储

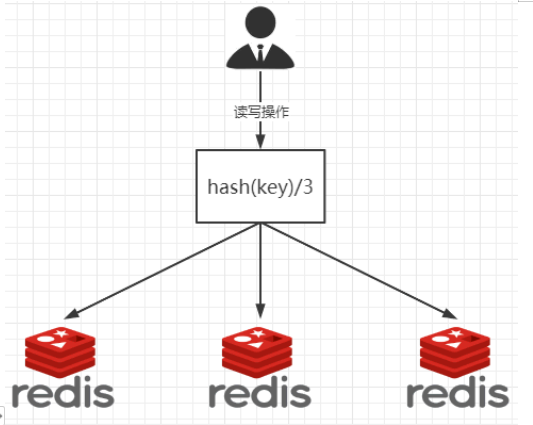

# 分布式存储-哈希取余算法

2亿条记录就是2亿个k,v,我们单机不行必须要分布式多机,假设有3台机器构成一个集群,用户每次读写操作都是根据公式:

hash(key) % N个机器台数,计算出哈希值,用来决定数据映射到哪一个节点上。

优点:

简单粗暴,直接有效,只需要预估好数据规划好节点,例如3台、8台、10台,就能保证一段时间的数据支撑。使用Hash算法让固定的一部分请求落到同一台服务器上,这样每台服务器固定处理一部分请求(并维护这些请求的信息),起到负载均衡+分而治之的作用。

缺点:

原来规划好的节点,进行扩容或者缩容就比较麻烦了,不管扩缩,每次数据变动导致节点有变动,映射关系需要重新进行计算,在服务器个数固定不变时没有问题,如果需要弹性扩容或故障停机的情况下,原来的取模公式就会发生变化:Hash(key)/3会变成Hash(key) /?。此时地址经过取余运算的结果将发生很大变化,根据公式获取的服务器也会变得不可控。某个redis机器宕机了,由于台数数量变化,会导致hash取余全部数据重新洗牌。

# 一致性哈希算法

提出一致性Hash解决方案。 目的是当服务器个数发生变动时, 尽量减少影响客户端到服务器的映射关系

# 部署3主3从redis实例

运行启动

docker run -d --name redis-node-1 --net host --privileged=true -v /data/redis/share/redis-node-1:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6381

docker run -d --name redis-node-2 --net host --privileged=true -v /data/redis/share/redis-node-2:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6382

docker run -d --name redis-node-3 --net host --privileged=true -v /data/redis/share/redis-node-3:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6383

docker run -d --name redis-node-4 --net host --privileged=true -v /data/redis/share/redis-node-4:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6384

docker run -d --name redis-node-5 --net host --privileged=true -v /data/redis/share/redis-node-5:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6385

docker run -d --name redis-node-6 --net host --privileged=true -v /data/redis/share/redis-node-6:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6386

2

3

4

5

6

7

8

9

10

11

[root@localhost /]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

8b737aeb68ce redis:6.0.8 "docker-entrypoint.s…" 6 seconds ago Up 5 seconds redis-node-6

36a789dcc763 redis:6.0.8 "docker-entrypoint.s…" 10 seconds ago Up 9 seconds redis-node-5

3fddc5bb53cb redis:6.0.8 "docker-entrypoint.s…" 13 seconds ago Up 13 seconds redis-node-4

20c8f7d45f12 redis:6.0.8 "docker-entrypoint.s…" 17 seconds ago Up 17 seconds redis-node-3

7b926dc255f3 redis:6.0.8 "docker-entrypoint.s…" 24 seconds ago Up 23 seconds redis-node-2

1908bc53858d redis:6.0.8 "docker-entrypoint.s…" 28 seconds ago Up 27 seconds redis-node-1

2

3

4

5

6

7

8

# 构建主从关系

进入node1

docker exec -it redis-node-1 /bin/bash

构建关系(ip是宿主机ip)

redis-cli --cluster create 211.69.238.105:6381 211.69.238.105:6382 211.69.238.105:6383 211.69.238.105:6384 211.69.238.105:6385 211.69.238.105:6386 --cluster-replicas 1

--cluster-replicas 1 表示为每个master创建一个slave节点

出现以下算是成功

[root@localhost /]# docker exec -it redis-node-1 /bin/bash

root@localhost:/data# redis-cli --cluster create 211.69.238.105:6381 211.69.238.105:6382 211.69.238.105:6383 211.69.238.105:6384 211.69.238.105:6385 211.69.238.105:6386 --cluster-replicas 1

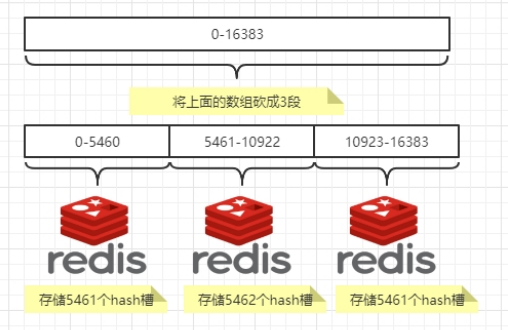

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 211.69.238.105:6385 to 211.69.238.105:6381

Adding replica 211.69.238.105:6386 to 211.69.238.105:6382

Adding replica 211.69.238.105:6384 to 211.69.238.105:6383

>>> Trying to optimize slaves allocation for anti-affinity

[WARNING] Some slaves are in the same host as their master

M: 14492903ed96cef3d9e58b52d5affd27ba88edf0 211.69.238.105:6381

slots:[0-5460] (5461 slots) master

M: 99b74bdf77893a6353e4f852de3b35e2550e93ed 211.69.238.105:6382

slots:[5461-10922] (5462 slots) master

M: ec222c92f9ba2d11e1be3726981a9a66327cb13a 211.69.238.105:6383

slots:[10923-16383] (5461 slots) master

S: 5ff81b524030e5a58aa53802aa35f6e43e7eef6c 211.69.238.105:6384

replicates 99b74bdf77893a6353e4f852de3b35e2550e93ed

S: d62be6d855c50b9c3bb2216230382e4e2b594622 211.69.238.105:6385

replicates ec222c92f9ba2d11e1be3726981a9a66327cb13a

S: ea4b2fd2676945eba8a283b75e9ba6c58043d75b 211.69.238.105:6386

replicates 14492903ed96cef3d9e58b52d5affd27ba88edf0

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

.

>>> Performing Cluster Check (using node 211.69.238.105:6381)

M: 14492903ed96cef3d9e58b52d5affd27ba88edf0 211.69.238.105:6381

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: ea4b2fd2676945eba8a283b75e9ba6c58043d75b 211.69.238.105:6386

slots: (0 slots) slave

replicates 14492903ed96cef3d9e58b52d5affd27ba88edf0

S: d62be6d855c50b9c3bb2216230382e4e2b594622 211.69.238.105:6385

slots: (0 slots) slave

replicates ec222c92f9ba2d11e1be3726981a9a66327cb13a

M: ec222c92f9ba2d11e1be3726981a9a66327cb13a 211.69.238.105:6383

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 5ff81b524030e5a58aa53802aa35f6e43e7eef6c 211.69.238.105:6384

slots: (0 slots) slave

replicates 99b74bdf77893a6353e4f852de3b35e2550e93ed

M: 99b74bdf77893a6353e4f852de3b35e2550e93ed 211.69.238.105:6382

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

# 查看信息

通过6381这个结点查看集群信息:

redis-cli -p 6381

cluster info

2

root@localhost:/data# redis-cli -p 6381

127.0.0.1:6381> cluster info

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:6

cluster_my_epoch:1

cluster_stats_messages_ping_sent:264

cluster_stats_messages_pong_sent:263

cluster_stats_messages_sent:527

cluster_stats_messages_ping_received:258

cluster_stats_messages_pong_received:264

cluster_stats_messages_meet_received:5

cluster_stats_messages_received:527

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

可以看到总结点个数以及从机的数量

通过下面的命令,查看具体的主、从机分别是哪个服务

cluster nodes

127.0.0.1:6381> cluster nodes

ea4b2fd2676945eba8a283b75e9ba6c58043d75b 211.69.238.105:6386@16386 slave 14492903ed96cef3d9e58b52d5affd27ba88edf0 0 1654606867000

d62be6d855c50b9c3bb2216230382e4e2b594622 211.69.238.105:6385@16385 slave ec222c92f9ba2d11e1be3726981a9a66327cb13a 0 1654606867000

ec222c92f9ba2d11e1be3726981a9a66327cb13a 211.69.238.105:6383@16383 master - 0 1654606867662 3 connected 10923-16383

5ff81b524030e5a58aa53802aa35f6e43e7eef6c 211.69.238.105:6384@16384 slave 99b74bdf77893a6353e4f852de3b35e2550e93ed 0 1654606868688

99b74bdf77893a6353e4f852de3b35e2550e93ed 211.69.238.105:6382@16382 master - 0 1654606869708 2 connected 5461-10922

14492903ed96cef3d9e58b52d5affd27ba88edf0 211.69.238.105:6381@16381 myself,master - 0 1654606868000 1 connected 0-5460

2

3

4

5

6

7

slave 后面跟的id就是这个机器的master的id

它们的关系是:

- 6381(m)->6386(c)

- 6382(m)->6384(c)

- 6383(m)->6385(c)

这个主从关系是随机分配的,并不是指定顺序的

# 测试

在结点1使用set命令进行存储测试,发现以下问题

127.0.0.1:6381> set k1 v1

(error) MOVED 12706 211.69.238.105:6383

127.0.0.1:6381> set k2 v2

OK

127.0.0.1:6381> set k3 v3

OK

127.0.0.1:6381> set k4 v4

(error) MOVED 8455 211.69.238.105:6382

127.0.0.1:6381>

2

3

4

5

6

7

8

9

因为我们仍然使用的是

redis-cli -p 63811命令进入的第一个结点,而存的时候,结点1能接受的哈希槽在0-5460之间,上面两个8455、12706都是超过了5460,所以储存失败

所以我们使用下面的命令,使用集群连接

redis-cli -p 6381 -c

现在重新set k1和k4,就成功了,它们都被存到了别的结点,且存入以后,直接跳到了对应结点中

root@localhost:/data# redis-cli -p 6381 -c

127.0.0.1:6381> set k1 v1

-> Redirected to slot [12706] located at 211.69.238.105:6383

OK

211.69.238.105:6383> set k4 v4 #变成了结点3

-> Redirected to slot [8455] located at 211.69.238.105:6382

OK

211.69.238.105:6382> #变到了结点2

2

3

4

5

6

7

8

# 查看集群信息

redis-cli --cluster check 211.69.238.105:6381

root@localhost:/data# redis-cli --cluster check 211.69.238.105:6381

211.69.238.105:6381 (14492903...) -> 2 keys | 5461 slots | 1 slaves.

211.69.238.105:6383 (ec222c92...) -> 1 keys | 5461 slots | 1 slaves.

211.69.238.105:6382 (99b74bdf...) -> 1 keys | 5462 slots | 1 slaves.

[OK] 4 keys in 3 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 211.69.238.105:6381)

M: 14492903ed96cef3d9e58b52d5affd27ba88edf0 211.69.238.105:6381

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: ea4b2fd2676945eba8a283b75e9ba6c58043d75b 211.69.238.105:6386

slots: (0 slots) slave

replicates 14492903ed96cef3d9e58b52d5affd27ba88edf0

S: d62be6d855c50b9c3bb2216230382e4e2b594622 211.69.238.105:6385

slots: (0 slots) slave

replicates ec222c92f9ba2d11e1be3726981a9a66327cb13a

M: ec222c92f9ba2d11e1be3726981a9a66327cb13a 211.69.238.105:6383

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 5ff81b524030e5a58aa53802aa35f6e43e7eef6c 211.69.238.105:6384

slots: (0 slots) slave

replicates 99b74bdf77893a6353e4f852de3b35e2550e93ed

M: 99b74bdf77893a6353e4f852de3b35e2550e93ed 211.69.238.105:6382

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

root@localhost:/data#

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

# 主从迁移案例

# 测试node1宕机,cluster切换

停掉node1

docker kill redis-node-1

进入node2查看集群状态

[root@localhost /]# docker exec -it redis-node-2 /bin/bash

root@localhost:/data# redis-cli -p 6382 -c

127.0.0.1:6382> cluster nodes

99b74bdf77893a6353e4f852de3b35e2550e93ed 211.69.238.105:6382@16382 myself,master - 0 1654678370000 2 connected 5461-10922

14492903ed96cef3d9e58b52d5affd27ba88edf0 211.69.238.105:6381@16381 master,fail - 1654678348557 1654678341000 1 disconnected

ea4b2fd2676945eba8a283b75e9ba6c58043d75b 211.69.238.105:6386@16386 master - 0 1654678369000 7 connected 0-5460

d62be6d855c50b9c3bb2216230382e4e2b594622 211.69.238.105:6385@16385 slave ec222c92f9ba2d11e1be3726981a9a66327cb13a 0 1654678369152 3 connecte

d

5ff81b524030e5a58aa53802aa35f6e43e7eef6c 211.69.238.105:6384@16384 slave 99b74bdf77893a6353e4f852de3b35e2550e93ed 0 1654678371197 2 connecte

d

ec222c92f9ba2d11e1be3726981a9a66327cb13a 211.69.238.105:6383@16383 master - 0 1654678370175 3 connected 10923-16383

127.0.0.1:6382>

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

可以发现,与node1对应的cluster node6,端口6386的结点,已经替代node1成为了master

# 恢复node1发生的变化

重新启动

docker start redis-node-1

在node2查看集群状态

[root@localhost /]# docker exec -it redis-node-2 /bin/bash

root@localhost:/data# redis-cli -p 6382 -c

127.0.0.1:6382> cluster nodes

99b74bdf77893a6353e4f852de3b35e2550e93ed 211.69.238.105:6382@16382 myself,master - 0 1654678791000 2 connected 5461-10922

14492903ed96cef3d9e58b52d5affd27ba88edf0 211.69.238.105:6381@16381 slave ea4b2fd2676945eba8a283b75e9ba6c58043d75b 0 1654678792000 7 connected

ea4b2fd2676945eba8a283b75e9ba6c58043d75b 211.69.238.105:6386@16386 master - 0 1654678793000 7 connected 0-5460

d62be6d855c50b9c3bb2216230382e4e2b594622 211.69.238.105:6385@16385 slave ec222c92f9ba2d11e1be3726981a9a66327cb13a 0 1654678792000 3 connected

5ff81b524030e5a58aa53802aa35f6e43e7eef6c 211.69.238.105:6384@16384 slave 99b74bdf77893a6353e4f852de3b35e2550e93ed 0 1654678793957 2 connected

ec222c92f9ba2d11e1be3726981a9a66327cb13a 211.69.238.105:6383@16383 master - 0 1654678792938 3 connected 10923-16383

127.0.0.1:6382>

2

3

4

5

6

7

8

9

10

可以发现,重新启动node1后,它并没有重新成为之前的master,而是成为了node6的slave

停掉master node3

docker kill redis-node-3

发现node5取代node3从slave成为了master

127.0.0.1:6382> cluster nodes

99b74bdf77893a6353e4f852de3b35e2550e93ed 211.69.238.105:6382@16382 myself,master - 0 1654679218000 2 connected 5461-10922

14492903ed96cef3d9e58b52d5affd27ba88edf0 211.69.238.105:6381@16381 slave ea4b2fd2676945eba8a283b75e9ba6c58043d75b 0 1654679219000 7 connected

ea4b2fd2676945eba8a283b75e9ba6c58043d75b 211.69.238.105:6386@16386 master - 0 1654679221488 7 connected 0-5460

d62be6d855c50b9c3bb2216230382e4e2b594622 211.69.238.105:6385@16385 master - 0 1654679220431 8 connected 10923-16383

5ff81b524030e5a58aa53802aa35f6e43e7eef6c 211.69.238.105:6384@16384 slave 99b74bdf77893a6353e4f852de3b35e2550e93ed 0 1654679220000 2 connected

ec222c92f9ba2d11e1be3726981a9a66327cb13a 211.69.238.105:6383@16383 master,fail - 1654679206127 1654679203000 3 disconnected

127.0.0.1:6382>

2

3

4

5

6

7

8

总结

redis主从容错切换迁移是有一套自己的规则的,当一个master宕机后,它的slave就会成为新的master,当它重新启动,它并不会又取代master的位置,而是成为新的master的slave

# 主从扩容

# 启动新的两个结点

启动两个新的结点,一主(6387)一从(6388)

docker run -d --name redis-node-7 --net host --privileged=true -v /data/redis/share/redis-node-7:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6387

docker run -d --name redis-node-8 --net host --privileged=true -v /data/redis/share/redis-node-8:/data redis:6.0.8 --cluster-enabled yes --appendonly yes --port 6388

2

3

# 将6387(空槽号)作为master加入到原集群

[root@localhost redis]# docker exec -it redis-node-7 /bin/bash

root@localhost:/data# redis-cli --cluster add-node 211.69.238.105:6387 211.69.238.105:6381

>>> Adding node 211.69.238.105:6387 to cluster 211.69.238.105:6381

Could not connect to Redis at 211.69.238.105:6383: Connection refused

>>> Performing Cluster Check (using node 211.69.238.105:6381)

S: 14492903ed96cef3d9e58b52d5affd27ba88edf0 211.69.238.105:6381

slots: (0 slots) slave

replicates ea4b2fd2676945eba8a283b75e9ba6c58043d75b

M: d62be6d855c50b9c3bb2216230382e4e2b594622 211.69.238.105:6385

slots:[10923-16383] (5461 slots) master

S: 5ff81b524030e5a58aa53802aa35f6e43e7eef6c 211.69.238.105:6384

slots: (0 slots) slave

replicates 99b74bdf77893a6353e4f852de3b35e2550e93ed

M: ea4b2fd2676945eba8a283b75e9ba6c58043d75b 211.69.238.105:6386

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: 99b74bdf77893a6353e4f852de3b35e2550e93ed 211.69.238.105:6382

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 211.69.238.105:6387 to make it join the cluster.

[OK] New node added correctly.

root@localhost:/data#

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

redis-cli --cluster add-node 211.69.238.105:6387 211.69.238.105:63811命令中的add-node后的第一个参数就是要添加的结点,第二个参数就是原来集群结点里面的

# 检查集群的情况

root@localhost:/data# redis-cli --cluster check 211.69.238.105:6381

Could not connect to Redis at 211.69.238.105:6383: Connection refused

211.69.238.105:6385 (d62be6d8...) -> 1 keys | 5461 slots | 0 slaves.

211.69.238.105:6386 (ea4b2fd2...) -> 2 keys | 5461 slots | 1 slaves.

211.69.238.105:6382 (99b74bdf...) -> 1 keys | 5462 slots | 1 slaves.

211.69.238.105:6387 (0eccebbb...) -> 0 keys | 0 slots | 0 slaves. #现在没有槽位和数据

[OK] 4 keys in 4 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 211.69.238.105:6381)

S: 14492903ed96cef3d9e58b52d5affd27ba88edf0 211.69.238.105:6381

slots: (0 slots) slave

replicates ea4b2fd2676945eba8a283b75e9ba6c58043d75b

M: d62be6d855c50b9c3bb2216230382e4e2b594622 211.69.238.105:6385

slots:[10923-16383] (5461 slots) master

S: 5ff81b524030e5a58aa53802aa35f6e43e7eef6c 211.69.238.105:6384

slots: (0 slots) slave

replicates 99b74bdf77893a6353e4f852de3b35e2550e93ed

M: ea4b2fd2676945eba8a283b75e9ba6c58043d75b 211.69.238.105:6386

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: 99b74bdf77893a6353e4f852de3b35e2550e93ed 211.69.238.105:6382

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

M: 0eccebbb994c94eb0f722ba17a4dbf3c3656345e 211.69.238.105:6387

slots: (0 slots) master

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

root@localhost:/data#

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

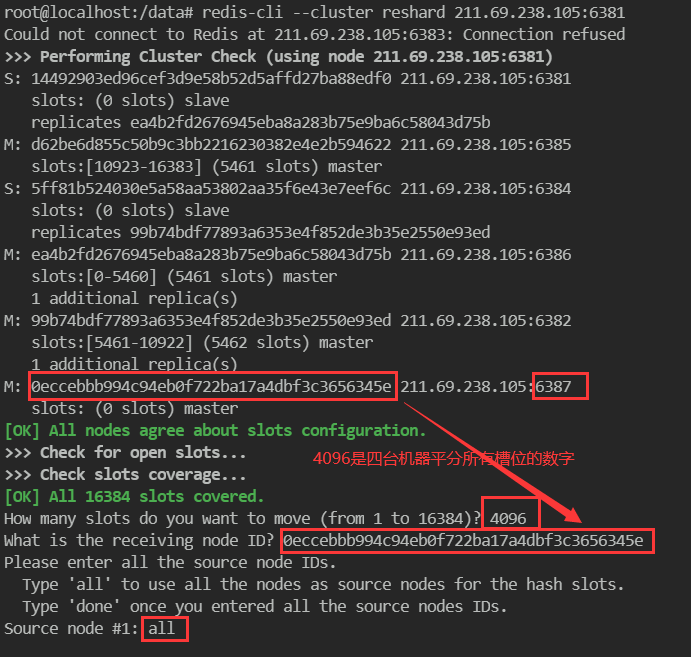

# 重新分配槽号

root@localhost:/data# redis-cli --cluster reshard 211.69.238.105:6381

- 这里会先让你选择重新分配后每个机器的槽位大小,直接用总的16,384/台数4,得到4096

- 然后选择分配的结点的id,即新加入的结点6387

- 选择all

- 最后等待槽位分配完成即可,时间不短

# 检查重新分配后的槽位情况

redis-cli --cluster check 211.69.238.105:6381

root@localhost:/data# redis-cli --cluster check 211.69.238.105:6381

Could not connect to Redis at 211.69.238.105:6383: Connection refused

211.69.238.105:6385 (d62be6d8...) -> 1 keys | 4096 slots | 0 slaves.

211.69.238.105:6386 (ea4b2fd2...) -> 1 keys | 4096 slots | 1 slaves.

211.69.238.105:6382 (99b74bdf...) -> 1 keys | 4096 slots | 1 slaves.

211.69.238.105:6387 (0eccebbb...) -> 1 keys | 4096 slots | 0 slaves.

[OK] 4 keys in 4 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 211.69.238.105:6381)

S: 14492903ed96cef3d9e58b52d5affd27ba88edf0 211.69.238.105:6381

slots: (0 slots) slave

replicates ea4b2fd2676945eba8a283b75e9ba6c58043d75b

M: d62be6d855c50b9c3bb2216230382e4e2b594622 211.69.238.105:6385

slots:[12288-16383] (4096 slots) master

S: 5ff81b524030e5a58aa53802aa35f6e43e7eef6c 211.69.238.105:6384

slots: (0 slots) slave

replicates 99b74bdf77893a6353e4f852de3b35e2550e93ed

M: ea4b2fd2676945eba8a283b75e9ba6c58043d75b 211.69.238.105:6386

slots:[1365-5460] (4096 slots) master

1 additional replica(s)

M: 99b74bdf77893a6353e4f852de3b35e2550e93ed 211.69.238.105:6382

slots:[6827-10922] (4096 slots) master

1 additional replica(s)

M: 0eccebbb994c94eb0f722ba17a4dbf3c3656345e 211.69.238.105:6387

slots:[0-1364],[5461-6826],[10923-12287] (4096 slots) master

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

可以看到,新的master的槽位,都是从原来的三个master的槽位中,从它们的开始槽位,都匀出4096/3个槽位给新的master

M: 0eccebbb994c94eb0f722ba17a4dbf3c3656345e 211.69.238.105:6387 slots:[0-1364],[5461-6826],[10923-12287] (4096 slots) master1

2

# 为新的master添加slave

redis-cli --cluster add-node ip:新slave端口 ip:新master端口 --cluster-slave --cluster-master-id 新主机结点id(6387的id)

redis-cli --cluster add-node 211.69.238.105:6388 211.69.238.105:6387 --cluster-slave --cluster-master-id 0eccebbb994c94eb0f722ba17a4dbf3c3656345e

2

3

执行成功

>>> Adding node 211.69.238.105:6388 to cluster 211.69.238.105:6387

Could not connect to Redis at 211.69.238.105:6383: Connection refused

>>> Performing Cluster Check (using node 211.69.238.105:6387)

M: 0eccebbb994c94eb0f722ba17a4dbf3c3656345e 211.69.238.105:6387

slots:[0-1364],[5461-6826],[10923-12287] (4096 slots) master

S: 14492903ed96cef3d9e58b52d5affd27ba88edf0 211.69.238.105:6381

slots: (0 slots) slave

replicates ea4b2fd2676945eba8a283b75e9ba6c58043d75b

M: d62be6d855c50b9c3bb2216230382e4e2b594622 211.69.238.105:6385

slots:[12288-16383] (4096 slots) master

S: 5ff81b524030e5a58aa53802aa35f6e43e7eef6c 211.69.238.105:6384

slots: (0 slots) slave

replicates 99b74bdf77893a6353e4f852de3b35e2550e93ed

M: 99b74bdf77893a6353e4f852de3b35e2550e93ed 211.69.238.105:6382

slots:[6827-10922] (4096 slots) master

1 additional replica(s)

M: ea4b2fd2676945eba8a283b75e9ba6c58043d75b 211.69.238.105:6386

slots:[1365-5460] (4096 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 211.69.238.105:6388 to make it join the cluster.

Waiting for the cluster to join

>>> Configure node as replica of 211.69.238.105:6387.

[OK] New node added correctly.

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

检查现在的集群状态,发现4主4从已完成(前面停了以后忘记将node3重新启动了)

root@localhost:/data# redis-cli --cluster check 211.69.238.105:6382

211.69.238.105:6382 (99b74bdf...) -> 1 keys | 4096 slots | 1 slaves.

211.69.238.105:6386 (ea4b2fd2...) -> 1 keys | 4096 slots | 1 slaves.

211.69.238.105:6387 (0eccebbb...) -> 1 keys | 4096 slots | 1 slaves.

211.69.238.105:6385 (d62be6d8...) -> 1 keys | 4096 slots | 1 slaves.

[OK] 4 keys in 4 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 211.69.238.105:6382)

M: 99b74bdf77893a6353e4f852de3b35e2550e93ed 211.69.238.105:6382

slots:[6827-10922] (4096 slots) master

1 additional replica(s)

S: 14492903ed96cef3d9e58b52d5affd27ba88edf0 211.69.238.105:6381

slots: (0 slots) slave

replicates ea4b2fd2676945eba8a283b75e9ba6c58043d75b

M: ea4b2fd2676945eba8a283b75e9ba6c58043d75b 211.69.238.105:6386

slots:[1365-5460] (4096 slots) master

1 additional replica(s)

M: 0eccebbb994c94eb0f722ba17a4dbf3c3656345e 211.69.238.105:6387

slots:[0-1364],[5461-6826],[10923-12287] (4096 slots) master

1 additional replica(s)

M: d62be6d855c50b9c3bb2216230382e4e2b594622 211.69.238.105:6385

slots:[12288-16383] (4096 slots) master

1 additional replica(s)

S: 5ff81b524030e5a58aa53802aa35f6e43e7eef6c 211.69.238.105:6384

slots: (0 slots) slave

replicates 99b74bdf77893a6353e4f852de3b35e2550e93ed

S: ec222c92f9ba2d11e1be3726981a9a66327cb13a 211.69.238.105:6383

slots: (0 slots) slave

replicates 0eccebbb994c94eb0f722ba17a4dbf3c3656345e

S: 653836c52a8aec92d910548f30853350facda255 211.69.238.105:6388

slots: (0 slots) slave

replicates d62be6d855c50b9c3bb2216230382e4e2b594622

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

# 主从缩容

目的:删除6387和6388,恢复3主3从

- 先清除slave结点6388

- 清出来的槽号重新分配

- 再清除master结点6387

- 恢复到3主3从

# 1、删除从机

redis-cli --cluster del-node ip:从机端口 从机6388节点ID

redis-cli --cluster del-node 211.69.238.105:6388 653836c52a8aec92d910548f30853350facda255

2

3

删除成功

root@localhost:/data# redis-cli --cluster del-node 211.69.238.105:6388 653836c52a8aec92d910548f30853350facda255

>>> Removing node 653836c52a8aec92d910548f30853350facda255 from cluster 211.69.238.105:6388

>>> Sending CLUSTER FORGET messages to the cluster...

>>> Sending CLUSTER RESET SOFT to the deleted node.

2

3

4

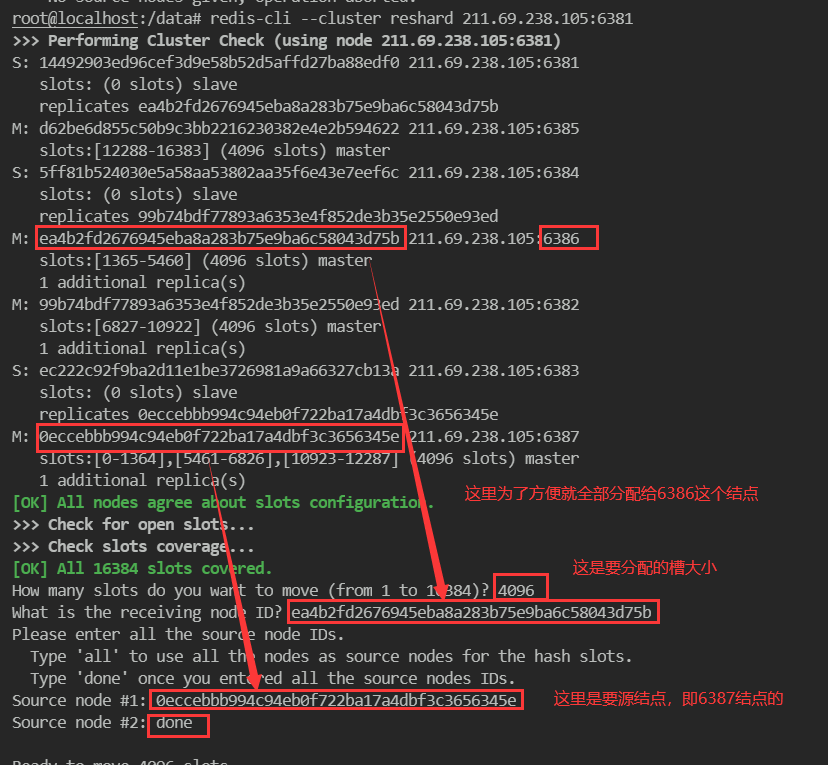

# 2、重新分配槽号

redis-cli --cluster reshard 211.69.238.105:6381

检查集群信息发下,6387的槽位已经全部移动到了6386下

# 3、将6387从集群中删除

redis-cli --cluster del-node 211.69.238.105:6387 0eccebbb994c94eb0f722ba17a4dbf3c3656345e

root@localhost:/data# redis-cli --cluster del-node 211.69.238.105:6387 0eccebbb994c94eb0f722ba17a4dbf3c3656345e

>>> Removing node 0eccebbb994c94eb0f722ba17a4dbf3c3656345e from cluster 211.69.238.105:6387

>>> Sending CLUSTER FORGET messages to the cluster...

>>> Sending CLUSTER RESET SOFT to the deleted node.

2

3

4

5

6

再查看下集群状况

root@localhost:/data# redis-cli --cluster check 211.69.238.105:6381

211.69.238.105:6385 (d62be6d8...) -> 1 keys | 4096 slots | 0 slaves.

211.69.238.105:6386 (ea4b2fd2...) -> 2 keys | 8192 slots | 2 slaves.

211.69.238.105:6382 (99b74bdf...) -> 1 keys | 4096 slots | 1 slaves.

[OK] 4 keys in 3 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 211.69.238.105:6381)

S: 14492903ed96cef3d9e58b52d5affd27ba88edf0 211.69.238.105:6381

slots: (0 slots) slave

replicates ea4b2fd2676945eba8a283b75e9ba6c58043d75b

M: d62be6d855c50b9c3bb2216230382e4e2b594622 211.69.238.105:6385

slots:[12288-16383] (4096 slots) master

S: 5ff81b524030e5a58aa53802aa35f6e43e7eef6c 211.69.238.105:6384

slots: (0 slots) slave

replicates 99b74bdf77893a6353e4f852de3b35e2550e93ed

M: ea4b2fd2676945eba8a283b75e9ba6c58043d75b 211.69.238.105:6386

slots:[0-6826],[10923-12287] (8192 slots) master

2 additional replica(s)

M: 99b74bdf77893a6353e4f852de3b35e2550e93ed 211.69.238.105:6382

slots:[6827-10922] (4096 slots) master

1 additional replica(s)

S: ec222c92f9ba2d11e1be3726981a9a66327cb13a 211.69.238.105:6383

slots: (0 slots) slave

replicates ea4b2fd2676945eba8a283b75e9ba6c58043d75b

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

已回到3主3从状态