# Seata

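# The distributed transaction problem

When a monolithic application is split into microservices, its N modules become N independent applications, each with its own data source, and a single business operation has to call N services to complete. A local transaction keeps the data consistent inside each service, but nothing guarantees consistency across all of them.

Whenever a business operation spans multiple data sources, or makes remote calls across multiple systems, a distributed transaction problem arises.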

# Introduction to Seata

Official site: http://seata.io/zh-cn/

# A typical distributed transaction flow

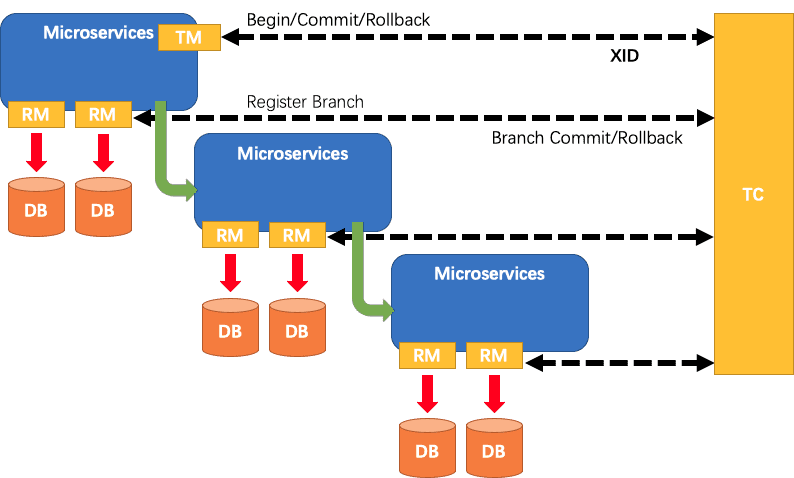

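# The "one ID + three components" model

One ID:

- Transaction ID (XID): the globally unique transaction ID.

Three components:

- Transaction Coordinator (TC): maintains the state of global and branch transactions, and drives the global commit or rollback.

- Transaction Manager (TM): defines the scope of a global transaction; it begins the global transaction and eventually initiates the global commit or rollback.

- Resource Manager (RM): manages the resources that branch transactions work on; it registers branches, reports their status, and receives instructions from the TC to drive the commit or rollback of branch (local) transactions.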

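# Processing steps

- The TM asks the TC to begin a global transaction; the global transaction is created and a globally unique XID is generated.

- The XID is propagated through the context of the microservice call chain.

- Each RM registers its branch transaction with the TC, placing it under the global transaction identified by the XID.

- The TM sends the TC a global commit or rollback decision for the XID.

- The TC drives every branch transaction under the XID to complete the commit or rollback.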
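The five steps above can be sketched as a toy in-memory model. This is only an illustration of the roles, not the Seata API: `ToyCoordinator`, `Branch`, and `ToyCoordinatorDemo` are hypothetical names, and a real TC talks to TMs and RMs over the network and persists its state.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.UUID;

/** Toy in-memory stand-in for the TC; hypothetical, not part of Seata. */
class ToyCoordinator {
    /** A branch is modelled as two callbacks owned by an RM. */
    interface Branch {
        void commit();
        void rollback();
    }

    private final Map<String, List<Branch>> branches = new HashMap<>();

    // Step 1: the TM asks the TC to begin a global transaction and receives an XID.
    String begin() {
        String xid = UUID.randomUUID().toString();
        branches.put(xid, new ArrayList<>());
        return xid;
    }

    // Step 3: an RM registers its branch under the XID it received (step 2).
    void registerBranch(String xid, Branch branch) {
        branches.get(xid).add(branch);
    }

    // Steps 4 and 5: the TM decides, and the TC drives every branch.
    void commit(String xid) {
        for (Branch b : branches.remove(xid)) {
            b.commit();
        }
    }

    void rollback(String xid) {
        for (Branch b : branches.remove(xid)) {
            b.rollback();
        }
    }
}

class ToyCoordinatorDemo {
    /** Runs one global transaction with two branches and returns the branch calls made. */
    static List<String> run(boolean fail) {
        ToyCoordinator tc = new ToyCoordinator();
        List<String> log = new ArrayList<>();
        String xid = tc.begin();
        for (String name : new String[] {"storage", "account"}) {
            tc.registerBranch(xid, new ToyCoordinator.Branch() {
                public void commit() { log.add(name + ":commit"); }
                public void rollback() { log.add(name + ":rollback"); }
            });
        }
        if (fail) {
            tc.rollback(xid);
        } else {
            tc.commit(xid);
        }
        return log;
    }

    public static void main(String[] args) {
        System.out.println(run(false));
        System.out.println(run(true));
    }
}
```

The point of the sketch is that the branches never decide on their own: they only register, and the coordinator applies one decision to all of them.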

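# Installing Seata-Server

Download a release from GitHub; I am using v1.5.2, the latest version at the time of writing.

Edit the application.yml configuration file.

Add the following configuration, which switches the config center and the registry to Nacos and sets the store mode to db (MySQL):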

```yaml
seata:
  config:
    # support: nacos, consul, apollo, zk, etcd3
    type: nacos
  registry:
    # support: nacos, eureka, redis, zk, consul, etcd3, sofa
    type: nacos
  store:
    # support: file, db, redis
    mode: db
    db:
      driverClassName: com.mysql.cj.jdbc.Driver
      url: jdbc:mysql://localhost:3306/seata_temp?useUnicode=true&characterEncoding=utf-8&useSSL=false&serverTimezone=GMT%2B8
      user: root
      password: root
```

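- Create the tables in that database. The SQL file is mysql.sql, under the seata\script\server\db directory.

- Start Nacos first, then run seata-server.bat from Seata's bin directory.

- Visit http://localhost:7091 to open the Seata console. The username and password are both seata.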

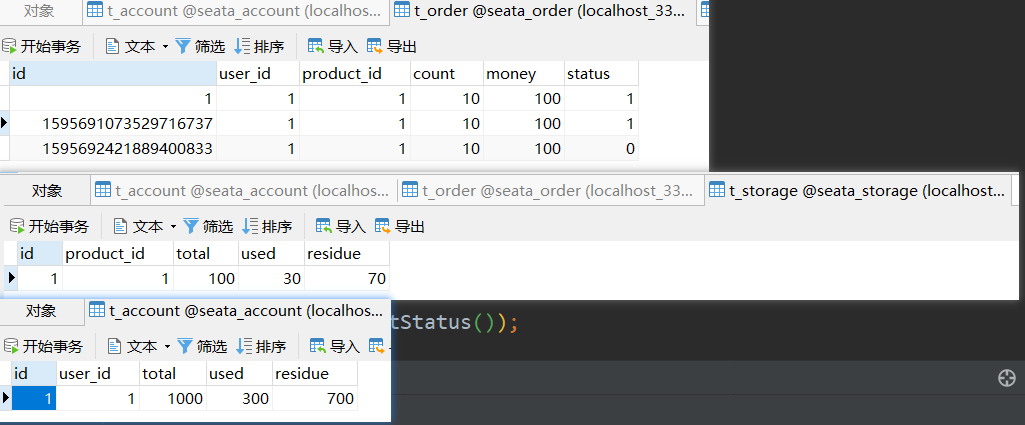

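# Preparing the order/storage/account business databases

Here we create three services: an order service, a storage service, and an account service.

When a user places an order, the order service creates the order, then calls the storage service remotely to deduct the stock of the ordered product, then calls the account service remotely to deduct the balance of the user's account, and finally sets the order status to finished in the order service.

This operation spans three databases and makes two remote calls, so it clearly has a distributed transaction problem.

That is: place the order -> deduct stock -> deduct balance.

# SQL for creating the databases and tables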

```sql
# seata_order
CREATE DATABASE seata_order;
USE seata_order;
CREATE TABLE t_order(
    id BIGINT(11) NOT NULL AUTO_INCREMENT PRIMARY KEY,
    user_id BIGINT(11) DEFAULT NULL COMMENT 'user id',
    product_id BIGINT(11) DEFAULT NULL COMMENT 'product id',
    count INT(11) DEFAULT NULL COMMENT 'quantity',
    money DECIMAL(11,0) DEFAULT NULL COMMENT 'amount',
    status INT(1) DEFAULT NULL COMMENT 'order status: 0 = creating, 1 = finished'
)ENGINE=InnoDB AUTO_INCREMENT=7 CHARSET=utf8;

# seata_storage
CREATE DATABASE seata_storage;
USE seata_storage;
CREATE TABLE t_storage(
    id BIGINT(11) NOT NULL AUTO_INCREMENT PRIMARY KEY,
    product_id BIGINT(11) DEFAULT NULL COMMENT 'product id',
    total INT(11) DEFAULT NULL COMMENT 'total stock',
    used INT(11) DEFAULT NULL COMMENT 'used stock',
    residue INT(11) DEFAULT NULL COMMENT 'remaining stock'
)ENGINE=InnoDB AUTO_INCREMENT=7 CHARSET=utf8;
INSERT INTO t_storage(id, product_id, total, used, residue) VALUES(1,1,100,0,100);

# seata_account
CREATE DATABASE seata_account;
USE seata_account;
CREATE TABLE t_account(
    id BIGINT(11) NOT NULL AUTO_INCREMENT PRIMARY KEY,
    user_id BIGINT(11) DEFAULT NULL COMMENT 'user id',
    total DECIMAL(10,0) DEFAULT NULL COMMENT 'total credit',
    used DECIMAL(10,0) DEFAULT NULL COMMENT 'used credit',
    residue DECIMAL(10,0) DEFAULT 0 COMMENT 'remaining available credit'
)ENGINE=InnoDB AUTO_INCREMENT=7 CHARSET=utf8;
INSERT INTO t_account(id, user_id, total, used, residue) VALUES(1,1,1000,0,1000);
```

# Create a rollback log table in each of the three databases

```sql
CREATE TABLE `undo_log` (
    `id` bigint(20) NOT NULL AUTO_INCREMENT,
    `branch_id` bigint(20) NOT NULL,
    `xid` varchar(100) NOT NULL,
    `context` varchar(128) NOT NULL,
    `rollback_info` longblob NOT NULL,
    `log_status` int(11) NOT NULL,
    `log_created` datetime NOT NULL,
    `log_modified` datetime NOT NULL,
    `ext` varchar(100) DEFAULT NULL,
    PRIMARY KEY (`id`),
    UNIQUE KEY `ux_undo_log` (`xid`,`branch_id`)
) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;
```

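# Preparing the order/storage/account microservices

The business requirement is simple: place the order -> deduct stock -> deduct balance -> update the (order) status.

This needs three microservices:

- Order: Order-Module, seata-order-service2001

- Storage: Storage-Module, seata-storage-service2002

- Account: Account-Module, seata-account-service2003

# Order

# pom

The usual dependencies: Nacos, OpenFeign, Seata, web, actuator, MySQL, and MyBatis.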

```xml
<dependencies>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-actuator</artifactId>
    </dependency>
    <dependency>
        <groupId>org.projectlombok</groupId>
        <artifactId>lombok</artifactId>
        <optional>true</optional>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-test</artifactId>
        <scope>test</scope>
    </dependency>
    <!-- nacos dependency -->
    <dependency>
        <groupId>com.alibaba.cloud</groupId>
        <artifactId>spring-cloud-starter-alibaba-nacos-discovery</artifactId>
    </dependency>
    <!-- openfeign dependency -->
    <dependency>
        <groupId>org.springframework.cloud</groupId>
        <artifactId>spring-cloud-starter-openfeign</artifactId>
    </dependency>
    <!-- ribbon dependency -->
    <!-- loadbalancer dependency -->
    <dependency>
        <groupId>org.springframework.cloud</groupId>
        <artifactId>spring-cloud-starter-loadbalancer</artifactId>
    </dependency>
    <!-- seata dependency -->
    <dependency>
        <groupId>com.alibaba.cloud</groupId>
        <artifactId>spring-cloud-starter-alibaba-seata</artifactId>
    </dependency>
    <!-- database-related dependencies below -->
    <dependency>
        <groupId>com.baomidou</groupId>
        <artifactId>mybatis-plus-boot-starter</artifactId>
    </dependency>
    <dependency>
        <groupId>com.alibaba</groupId>
        <artifactId>druid-spring-boot-starter</artifactId>
    </dependency>
    <dependency>
        <groupId>mysql</groupId>
        <artifactId>mysql-connector-java</artifactId>
    </dependency>
</dependencies>
```

# yaml

```yaml
server:
  port: 2001

spring:
  application:
    name: seata-order-service
  cloud:
    nacos:
      discovery:
        server-addr: localhost:8848
    alibaba:
      seata:
        tx-service-group: my_seata
  datasource:
    # data source type in use
    type: com.alibaba.druid.pool.DruidDataSource
    driver-class-name: com.mysql.cj.jdbc.Driver
    url: jdbc:mysql://localhost:3306/seata_order?useUnicode=true&characterEncoding=utf-8&useSSL=false&serverTimezone=GMT%2B8
    username: root
    password: root

mybatis-plus:
  mapper-locations: classpath:/mapper/*.xml
  type-aliases-package: com.zdk.springcloud.alibaba.domain

logging:
  level:
    io:
      seata: info
```

# file.conf

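In this file, pay particular attention to two settings in the `service` block: `vgroupMapping.my_seata = "my_seata"` and `my_seata.grouplist = "127.0.0.1:8091"`.

The `my_seata` suffix of `vgroupMapping.my_seata` must equal the `tx-service-group` value configured in the yaml, and the quoted value must equal the prefix of `my_seata.grouplist`.

Otherwise the application fails at startup because it cannot find the Seata server.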

```conf
transport {
  # tcp udt unix-domain-socket
  type = "TCP"
  #NIO NATIVE
  server = "NIO"
  #enable heartbeat
  heartbeat = true
  #thread factory for netty
  thread-factory {
    boss-thread-prefix = "NettyBoss"
    worker-thread-prefix = "NettyServerNIOWorker"
    server-executor-thread-prefix = "NettyServerBizHandler"
    share-boss-worker = false
    client-selector-thread-prefix = "NettyClientSelector"
    client-selector-thread-size = 1
    client-worker-thread-prefix = "NettyClientWorkerThread"
    # netty boss thread size,will not be used for UDT
    boss-thread-size = 1
    #auto default pin or 8
    worker-thread-size = 8
  }
  shutdown {
    # when destroy server, wait seconds
    wait = 3
  }
  serialization = "seata"
  compressor = "none"
}

service {
  #vgroup->rgroup
  vgroupMapping.my_seata = "my_seata"
  #only support single node
  my_seata.grouplist = "127.0.0.1:8091"
  #degrade current not support
  enableDegrade = false
  #disable
  disable = false
  #unit ms,s,m,h,d represents milliseconds, seconds, minutes, hours, days, default permanent
  max.commit.retry.timeout = "-1"
  max.rollback.retry.timeout = "-1"
}

client {
  async.commit.buffer.limit = 10000
  lock {
    retry.internal = 10
    retry.times = 30
  }
  report.retry.count = 5
}

## transaction log store
store {
  ## store mode: file, db
  mode = "file"

  ## file store
  file {
    dir = "sessionStore"
    # branch session size , if exceeded first try compress lockkey, still exceeded throws exceptions
    max-branch-session-size = 16384
    # globe session size , if exceeded throws exceptions
    max-global-session-size = 512
    # file buffer size , if exceeded allocate new buffer
    file-write-buffer-cache-size = 16384
    # when recover batch read size
    session.reload.read_size = 100
    # async, sync
    flush-disk-mode = async
  }

  ## database store
  db {
    ## the implement of javax.sql.DataSource, such as DruidDataSource(druid)/BasicDataSource(dbcp) etc.
    datasource = "druid"
    ## mysql/oracle/h2/oceanbase etc.
    db-type = "mysql"
    url = "jdbc:mysql://localhost:3306/seata_temp?useUnicode=true&characterEncoding=utf-8&useSSL=false&serverTimezone=GMT%2B8"
    user = "root"
    password = "root"
    min-conn = 1
    max-conn = 3
    global.table = "global_table"
    branch.table = "branch_table"
    lock-table = "lock_table"
    query-limit = 100
  }
}

lock {
  ## the lock store mode: local, remote
  mode = "remote"
  local {
    ## store locks in user's database
  }
  remote {
    ## store locks in the seata's server
  }
}

recovery {
  committing-retry-delay = 30
  asyn-committing-retry-delay = 30
  rollbacking-retry-delay = 30
  timeout-retry-delay = 30
}

transaction {
  undo.data.validation = true
  undo.log.serialization = "jackson"
}

## metrics settings
metrics {
  enabled = false
  registry-type = "compact"
  # multi exporters use comma divided
  exporter-list = "prometheus"
  exporter-prometheus-port = 9898
}
```
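The naming rule for the `service` block is easier to see as a two-step lookup: the transaction service group selects a cluster name, and the cluster name selects an address list. The sketch below is a hypothetical helper, not Seata's own resolution code; `VgroupResolver` and the flattened `service.` key prefix are assumptions made for illustration.

```java
import java.util.Properties;

/** Hypothetical helper that mirrors the lookup chain; not Seata's implementation. */
class VgroupResolver {
    /** Resolves tx-service-group -> cluster name -> server address list. */
    static String resolve(Properties conf, String txServiceGroup) {
        String cluster = conf.getProperty("service.vgroupMapping." + txServiceGroup);
        if (cluster == null) {
            throw new IllegalStateException("no vgroupMapping for " + txServiceGroup);
        }
        String servers = conf.getProperty("service." + cluster + ".grouplist");
        if (servers == null) {
            throw new IllegalStateException("no grouplist for cluster " + cluster);
        }
        return servers;
    }

    /** The two service settings from file.conf, flattened into properties. */
    static Properties sample() {
        Properties conf = new Properties();
        conf.setProperty("service.vgroupMapping.my_seata", "my_seata");
        conf.setProperty("service.my_seata.grouplist", "127.0.0.1:8091");
        return conf;
    }

    public static void main(String[] args) {
        System.out.println(resolve(sample(), "my_seata"));
    }
}
```

If either link in the chain is missing or misspelled, the lookup fails, which corresponds to the startup error described earlier.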

# registry.conf

```conf
registry {
  # file, nacos, eureka, redis, zk, consul, etcd3, sofa
  type = "nacos"

  nacos {
    serverAddr = "localhost"
    namespace = "public"
    cluster = "default"
  }
  eureka {
    serviceUrl = "http://localhost:8761/eureka"
    application = "default"
    weight = "1"
  }
  redis {
    serverAddr = "localhost:6379"
    db = "0"
  }
  zk {
    cluster = "default"
    serverAddr = "127.0.0.1:2181"
    session.timeout = 6000
    connect.timeout = 2000
  }
  consul {
    cluster = "default"
    serverAddr = "127.0.0.1:8500"
  }
  etcd3 {
    cluster = "default"
    serverAddr = "http://localhost:2379"
  }
  sofa {
    serverAddr = "127.0.0.1:9603"
    application = "default"
    region = "DEFAULT_ZONE"
    datacenter = "DefaultDataCenter"
    cluster = "default"
    group = "SEATA_GROUP"
    addressWaitTime = "3000"
  }
  file {
    name = "file.conf"
  }
}

config {
  # file, nacos, apollo, zk, consul, etcd3
  type = "nacos"

  nacos {
    serverAddr = "localhost"
    namespace = "public"
    cluster = "default"
  }
  consul {
    serverAddr = "127.0.0.1:8500"
  }
  apollo {
    app.id = "seata-server"
    apollo.meta = "http://192.168.1.204:8801"
  }
  zk {
    serverAddr = "127.0.0.1:2181"
    session.timeout = 6000
    connect.timeout = 2000
  }
  etcd3 {
    serverAddr = "http://localhost:2379"
  }
  file {
    name = "file.conf"
  }
}
```

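Note: both conf files above must be placed in the project's resources directory.

The rest of the project is not reproduced in these notes; see the GitHub repository or the Gitee repository.

# Testing

Send a POST request to http://localhost:2001/order/create

with the following parameters: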

```json
{
  "userId": 1,
  "productId": 1,
  "count": 10,
  "money": 100,
  "status": 0
}
```

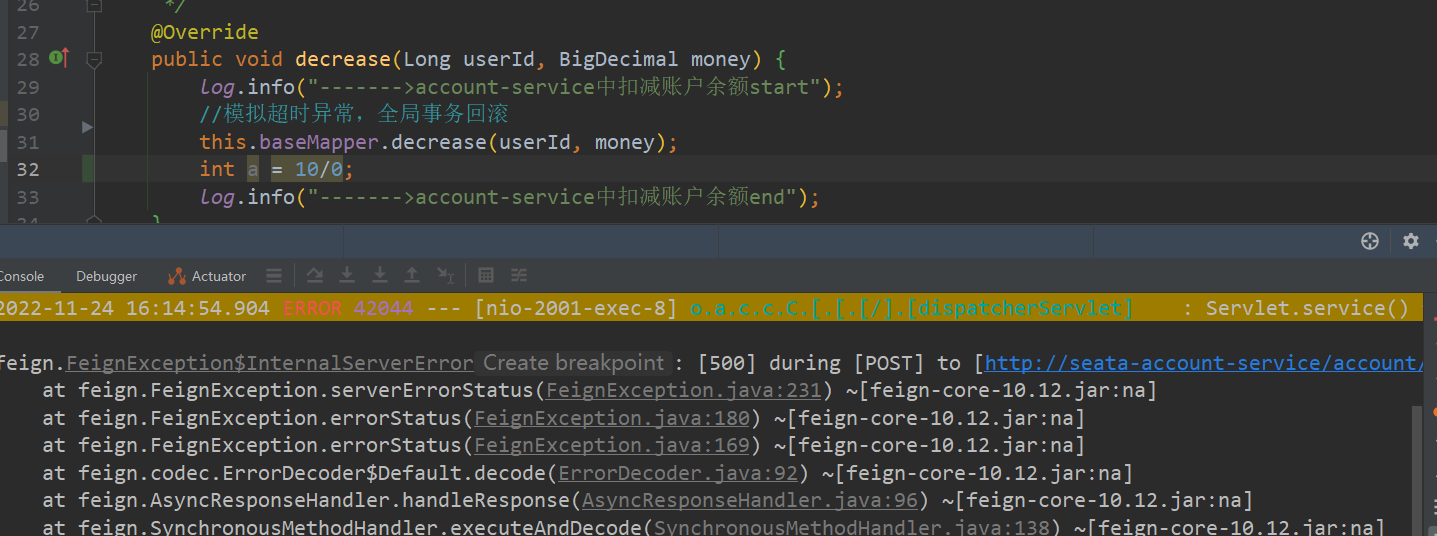
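The same request can also be sent from code. The following is a minimal sketch using the JDK 11+ `java.net.http` client. It assumes the endpoint accepts the parameters as a JSON request body, which these notes do not state explicitly, and `CreateOrderClient` is a hypothetical class name.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

/** Hypothetical test client; assumes the endpoint takes a JSON body. */
class CreateOrderClient {
    static final String BODY =
        "{\"userId\":1,\"productId\":1,\"count\":10,\"money\":100,\"status\":0}";

    /** Builds the POST request without sending it. */
    static HttpRequest buildRequest() {
        return HttpRequest.newBuilder(URI.create("http://localhost:2001/order/create"))
            .header("Content-Type", "application/json")
            .POST(HttpRequest.BodyPublishers.ofString(BODY))
            .build();
    }

    public static void main(String[] args) throws Exception {
        // Requires the order service to be running on port 2001.
        HttpResponse<String> response = HttpClient.newHttpClient()
            .send(buildRequest(), HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode() + " " + response.body());
    }
}
```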

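# Exception thrown, without @GlobalTransactional

Make AccountServiceImpl simulate a timeout exception.

The databases now show that the order was created, the stock was deducted, and the account balance was deducted as well, but the order status was never updated, because the exception occurred before the update ran. What we want instead is for none of the three database operations to take effect: they should all roll back together.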
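The inconsistent state can be reproduced with a toy model in which each step commits on its own as soon as it runs. Everything here is hypothetical: `NoGlobalTxDemo` stands in for the three services and their databases, and the starting values match the rows inserted earlier.

```java
import java.util.HashMap;
import java.util.Map;

/** In-memory stand-ins for the three databases; hypothetical, not the project code. */
class NoGlobalTxDemo {
    final Map<String, Integer> order = new HashMap<>();
    int storageResidue = 100;
    int accountResidue = 1000;

    /** Each step takes effect immediately, like three independent local transactions. */
    void createOrder(int count, int money, boolean accountTimesOut) {
        order.put("status", 0);   // order service: insert the order with status 0
        storageResidue -= count;  // storage service: deduct stock
        accountResidue -= money;  // account service: deduct balance
        if (accountTimesOut) {
            // The caller sees a timeout although the account update already took effect.
            throw new IllegalStateException("simulated timeout calling the account service");
        }
        order.put("status", 1);   // order service: mark the order as finished
    }

    public static void main(String[] args) {
        NoGlobalTxDemo demo = new NoGlobalTxDemo();
        try {
            demo.createOrder(10, 100, true);
        } catch (IllegalStateException e) {
            System.out.println("request failed: " + e.getMessage());
        }
        System.out.println("order status=" + demo.order.get("status")
            + ", stock=" + demo.storageResidue
            + ", balance=" + demo.accountResidue);
    }
}
```

After the failed call, the stock and balance are reduced while the order is still stuck at status 0, which is the partial result described above.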

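# Exception thrown, with @GlobalTransactional

Now add the annotation:

@GlobalTransactional(name = "my_seata", rollbackFor = Exception.class)

Restart OrderService and call the create-order endpoint again: none of the data has changed, so the rollback was carried out.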
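Why the data returns to its original state can be illustrated with a toy undo log. Seata's AT mode records a before image of each changed row in the undo_log tables and restores it on rollback. The sketch below imitates only that idea in memory. `UndoLogDemo` is a hypothetical class, the three databases are collapsed into one map, and global locks and after-image validation are left out.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;

/** Toy undo log: remembers the value of each key before it is changed. */
class UndoLogDemo {
    /** One undo record: the key that changed and its value before the change. */
    static final class Entry {
        final String key;
        final Integer before;
        Entry(String key, Integer before) { this.key = key; this.before = before; }
    }

    final Map<String, Integer> rows = new HashMap<>();
    private final Deque<Entry> undoLog = new ArrayDeque<>();

    void update(String key, int newValue) {
        undoLog.push(new Entry(key, rows.get(key))); // record the before image first
        rows.put(key, newValue);
    }

    /** Restores before images in reverse order; a null image means the row was new. */
    void rollback() {
        while (!undoLog.isEmpty()) {
            Entry e = undoLog.pop();
            if (e.before == null) {
                rows.remove(e.key);
            } else {
                rows.put(e.key, e.before);
            }
        }
    }

    void commit() { undoLog.clear(); }

    static Map<String, Integer> run(boolean fail) {
        UndoLogDemo db = new UndoLogDemo();
        db.rows.put("storage.residue", 100);
        db.rows.put("account.residue", 1000);
        try {
            db.update("order.status", 0);
            db.update("storage.residue", 90);
            db.update("account.residue", 900);
            if (fail) {
                throw new IllegalStateException("simulated timeout");
            }
            db.update("order.status", 1);
            db.commit();
        } catch (IllegalStateException e) {
            db.rollback();
        }
        return db.rows;
    }

    public static void main(String[] args) {
        System.out.println(run(true));
        System.out.println(run(false));
    }
}
```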